Video-R2

GitHub: https://github.com/mbzuai-oryx/Video-R2

Paper: https://arxiv.org/abs/2511.23478

Overview

Video-R2 is a video reasoning multimodal language model (MLLM) designed to produce consistent, temporally grounded, and visually faithful reasoning over dynamic video content.

It addresses two common failure modes of prior video reasoning models:

- Logical inconsistency between reasoning and final answers

- Over-reliance on linguistic priors instead of video evidence

In the paper, we propose two diagnostic metrics to measure these failure modes:

- Think-Answer Consistency (TAC): alignment between the generated reasoning and the final answer.

- Video Attention Score (VAS): extent to which the model's reasoning relies on video evidence rather than linguistic priors or world knowledge.
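As a rough illustration of what TAC measures, the sketch below computes a simplified rule-based proxy on multiple-choice outputs. It assumes responses follow a `<think>...</think><answer>...</answer>` template and that the option the reasoning commits to is the last "option X" phrase in the think block. The template, the regexes, and the function names are hypothetical. The paper's actual TAC procedure may differ, for example by using a judge model.

```python
import re
from typing import List, Optional

# Hypothetical output template: <think>reasoning</think><answer>final</answer>
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)
ANSWER_RE = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)
# Option letters stated in the reasoning, e.g. "option A" or "Option (B)"
CONCLUSION_RE = re.compile(r"[Oo]ption\s+\(?([A-E])\b")
# Option letter at the start of the answer block, e.g. "E" or "(E) ..."
ANSWER_LETTER_RE = re.compile(r"^\s*\(?([A-E])\b")


def concluded_option(think: str) -> Optional[str]:
    """Last option letter the reasoning commits to, or None."""
    found = CONCLUSION_RE.findall(think)
    return found[-1] if found else None


def final_option(answer: str) -> Optional[str]:
    """Option letter given in the final answer block, or None."""
    match = ANSWER_LETTER_RE.match(answer)
    return match.group(1) if match else None


def is_consistent(response: str) -> bool:
    """True if the option concluded in the reasoning matches the final answer."""
    think = THINK_RE.search(response)
    answer = ANSWER_RE.search(response)
    if think is None or answer is None:
        return False  # malformed outputs count as inconsistent in this proxy
    concluded = concluded_option(think.group(1))
    final = final_option(answer.group(1))
    return concluded is not None and concluded == final


def tac_proxy(responses: List[str]) -> float:
    """Fraction of responses whose reasoning and final answer agree."""
    if not responses:
        return 0.0
    return sum(is_consistent(r) for r in responses) / len(responses)
```

A response that reasons toward option A but answers E, like the baseline failure described in the overview, counts as inconsistent under this proxy.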

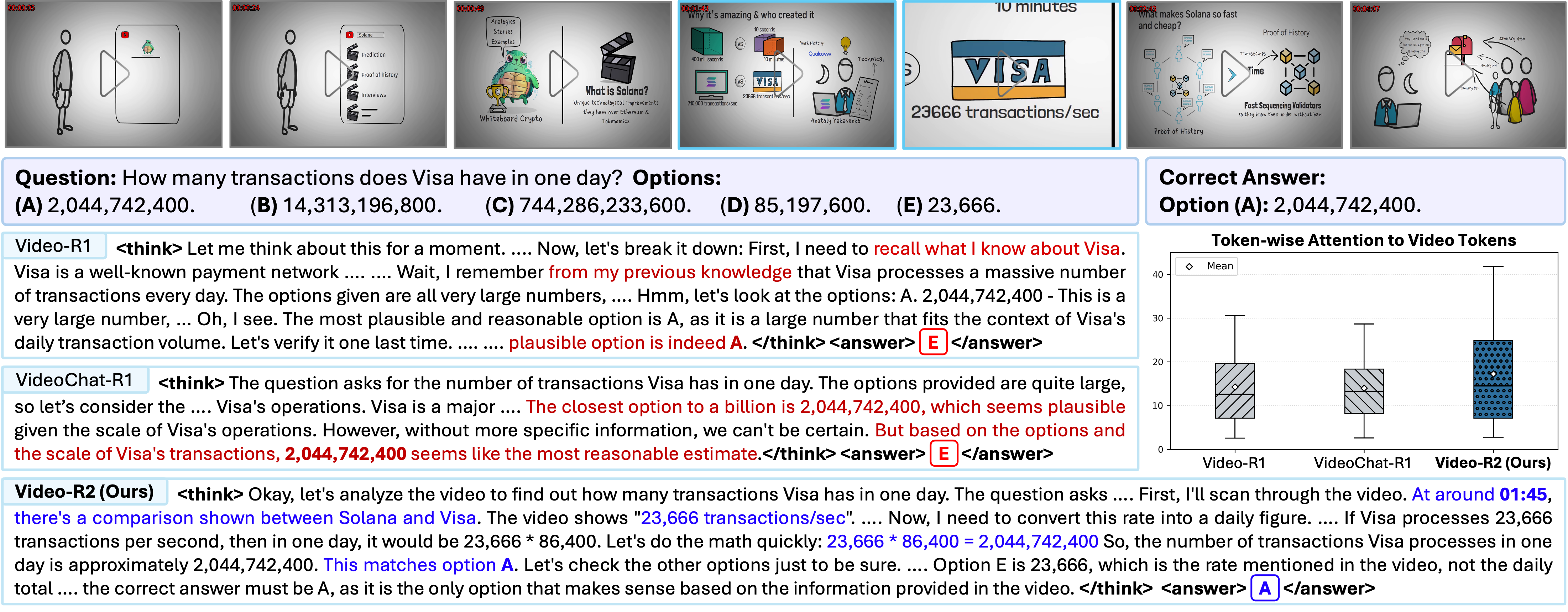

Inconsistent reasoning in prior video LLMs and improved visual reliance with Video-R2.

Given the video and the question “How many transactions does Visa have in one day?”, both Video-R1 and VideoChat-R1 conclude option A during their reasoning but ultimately predict option E, so their reasoning and final answers do not match. This occurs because these models rely heavily on textual context and prior knowledge while attending only weakly to the video. In contrast, Video-R2 correctly identifies the on-screen visual cue at 01:45 (“23,666 transactions/sec”), performs the temporal conversion (23,666 per second × 86,400 seconds per day ≈ 2.04 billion per day), and arrives at the correct daily value. The box plot on the right shows the average attention from generated tokens to video tokens across all attention heads in the final transformer layer. Compared with the baselines, Video-R2 assigns higher and more widely distributed attention to video tokens, indicating stronger and more adaptive visual reliance. Whereas earlier models often produce plausible yet inconsistent reasoning, Video-R2 reasons coherently and grounds its decisions in the video evidence.
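The box-plot quantity above can be sketched as follows. This assumes the final layer's attention weights are available as a nested list indexed `[head][query][key]` (for example, from a forward pass with attention outputs enabled), together with the sequence positions of video tokens and generated tokens. The function name and the choice to sum each generated token's attention over all video positions before averaging over heads and generated tokens are our assumptions, not the paper's code.

```python
from typing import Sequence


def mean_video_attention(
    attn: Sequence[Sequence[Sequence[float]]],  # [head][query][key]; each row sums to 1
    video_positions: Sequence[int],
    generated_positions: Sequence[int],
) -> float:
    """Average attention mass that generated tokens place on video tokens.

    For each head and each generated (query) token, sum the attention weights
    on the video (key) positions, then average over heads and generated tokens.
    """
    if not attn or not generated_positions:
        raise ValueError("need at least one head and one generated token")
    total = 0.0
    for head in attn:
        for q in generated_positions:
            row = head[q]
            total += sum(row[k] for k in video_positions)
    return total / (len(attn) * len(generated_positions))
```

A value near 1 means generated tokens attend almost entirely to the video; a value near 0 means they rely on text tokens instead. Averaging per head rather than pooling all weights keeps every head's contribution equal.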

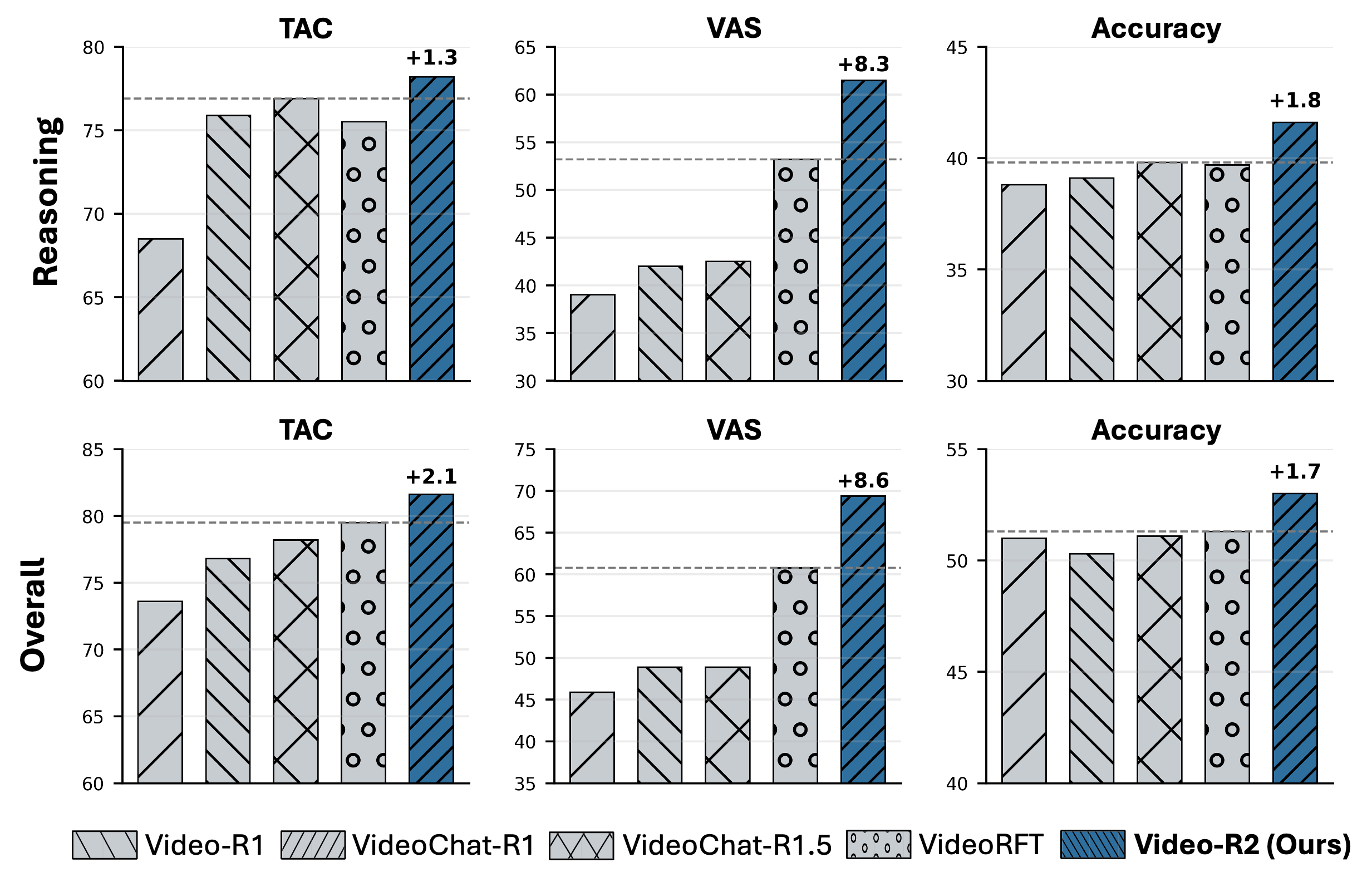

Comparison of Video-R2 with recent video reasoning models (Video-R1, VideoChat-R1/1.5, and VideoRFT) across three metrics: TAC (Think-Answer Consistency), VAS (Video Attention Score), and accuracy.

The upper row reports average scores over six reasoning benchmarks (VideoMathQA, Video-MMMU, MMVU, VSIBench, MINERVA, and SciVideoBench), while the lower row reports averages over all 11 benchmarks, which add five general benchmarks (MVBench, VideoMME, TempCompass, MLVU, and LongVideoBench). Video-R2 performs better in both the reasoning and the overall evaluations, achieving higher consistency (TAC) and more video-focused reasoning (VAS) while maintaining competitive accuracy.

Key Ideas

Video-R2 combines two post-training stages:

- Timestamp-aware supervised fine-tuning (SFT) to encourage explicit temporal grounding

- Group Relative Policy Optimization (GRPO) to reinforce consistency and video reliance
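GRPO avoids a learned value model. For each prompt it samples a group of responses and normalizes each response's reward by the group mean and standard deviation. The sketch below shows only that advantage step. The composite reward (answer correctness plus a think-answer consistency bonus) and its weights are hypothetical placeholders, not Video-R2's actual reward terms, which are described in the paper.

```python
import statistics
from typing import List


def hypothetical_reward(correct: bool, consistent: bool,
                        w_acc: float = 1.0, w_cons: float = 0.5) -> float:
    """Hypothetical scalar reward: correctness plus a consistency bonus."""
    return w_acc * float(correct) + w_cons * float(consistent)


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """GRPO-style advantages: (r_i - mean(group)) / (std(group) + eps).

    Uses the population standard deviation; eps avoids division by zero
    when every response in the group receives the same reward.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]
```

For a group of four sampled answers to one question, responses that are both correct and consistent receive the largest positive advantage, and a group with identical rewards yields all-zero advantages. In full GRPO these advantages weight a clipped policy-gradient objective with a KL penalty toward a reference model; that part is omitted here.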

Training Summary

- Base model: Qwen2.5-VL-Instruct (7B)

- Stage 1: Timestamp-aware SFT

- Stage 2: GRPO with Temporal Alignment Reward (TAR)

- Training dataset: MBZUAI/Video-R2-Dataset
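The exact definition of the Temporal Alignment Reward is given in the paper and repository and is not reproduced here. Purely to illustrate what rewarding temporal grounding can look like, the sketch below parses `mm:ss` or `hh:mm:ss` timestamps cited in the reasoning (such as “01:45” in the Visa example) and scores the fraction that fall within a tolerance of annotated evidence times. The evidence-time annotation, the 5-second tolerance, and the scoring rule are all assumptions, and `hypothetical_temporal_alignment` is not the paper's TAR.

```python
import re
from typing import List, Sequence

# Matches hh:mm:ss or mm:ss, e.g. "01:45" or "1:02:03"
TIMESTAMP_RE = re.compile(r"\b(?:(\d{1,2}):)?(\d{1,2}):(\d{2})\b")


def parse_timestamps(text: str) -> List[int]:
    """Return every timestamp cited in `text`, converted to seconds."""
    seconds = []
    for hours, minutes, secs in TIMESTAMP_RE.findall(text):
        seconds.append(int(hours or 0) * 3600 + int(minutes) * 60 + int(secs))
    return seconds


def hypothetical_temporal_alignment(reasoning: str,
                                    evidence_times: Sequence[float],
                                    tolerance: float = 5.0) -> float:
    """Fraction of cited timestamps within `tolerance` seconds of any evidence time.

    Returns 0.0 when the reasoning cites no timestamps or no evidence is annotated.
    """
    cited = parse_timestamps(reasoning)
    if not cited or not evidence_times:
        return 0.0
    hits = sum(any(abs(t - e) <= tolerance for e in evidence_times) for t in cited)
    return hits / len(cited)
```

Under this toy rule, a trace that cites the correct moment plus one irrelevant moment scores 0.5, and a trace that cites no timestamps earns nothing.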

For full training details, see the GitHub repository: https://github.com/mbzuai-oryx/Video-R2

Evaluation

Video-R2 is evaluated on 11 benchmarks:

- 5 general benchmarks (MVBench, VideoMME, TempCompass, MLVU, LongVideoBench)

- 6 reasoning-focused benchmarks (VideoMathQA, Video-MMMU, MMVU, VSIBench, MINERVA, SciVideoBench)
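The reasoning and overall averages reported in the comparison figure are computed over these two benchmark groups. A minimal sketch of that aggregation follows, assuming an unweighted mean of per-benchmark scores. The weighting is our assumption; see the eval directory for the exact protocol.

```python
from statistics import fmean
from typing import Dict, Tuple

GENERAL = ("MVBench", "VideoMME", "TempCompass", "MLVU", "LongVideoBench")
REASONING = ("VideoMathQA", "Video-MMMU", "MMVU", "VSIBench", "MINERVA", "SciVideoBench")


def summarize(scores: Dict[str, float]) -> Tuple[float, float]:
    """Return (reasoning average over 6 benchmarks, overall average over all 11)."""
    missing = [b for b in GENERAL + REASONING if b not in scores]
    if missing:
        raise KeyError(f"missing benchmarks: {missing}")
    reasoning_avg = fmean(scores[b] for b in REASONING)
    overall_avg = fmean(scores[b] for b in GENERAL + REASONING)
    return reasoning_avg, overall_avg
```

Because the overall mean counts each of the 11 benchmarks once, the six reasoning benchmarks carry slightly more weight in it than the five general ones.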

Please refer to https://github.com/mbzuai-oryx/Video-R2/tree/main/eval for details.

Usage

A Gradio demo is provided at https://github.com/mbzuai-oryx/Video-R2/tree/main/demo. From a local clone of the repository, launch it with:

```bash
cd demo
python gradio_demo.py --ckpt MBZUAI/Video-R2 --port 7860
```

Citation ✏️

If you find Video-R2 helpful, please cite:

```bibtex
@article{maaz2025video-r2,
  title={Video-R2: Reinforcing Consistent and Grounded Reasoning in Multimodal Language Models},
  author={Maaz, Muhammad and Rasheed, Hanoona and Khan, Fahad Shahbaz and Khan, Salman},
  journal={arXiv preprint arXiv:2511.23478},
  year={2025}
}
```
