tags:
- ai-safety
- physical-ai
- action-modeling
- explainable-safety
- human-in-the-loop

Author’s Note — Why This Document Exists

ISE (Intent–State–Effect) Model: Isolating Judgment for Cost Optimization and Explainable Safety

For a long time, many systems have assumed that if enough sensing data is fed into AI, intent will naturally emerge on its own.

In practice, this belief has resulted in exploding token costs, delayed responses, and opaque system structures in which responsibility cannot be clearly identified when accidents occur.

The problem is not a lack of intelligence.

It lies in the failure to clearly separate observed data (State) from the basis for execution (Intent) at the design stage.

The ISE model was designed to structurally isolate judgment.

A physical action must go beyond recording a log of what happened; it must allow us to trace and audit why it was permitted.

“Should a sprinkler be activated when a fire alarm goes off?”

Answering this question is the role of a system’s judgment structure.

Intent–State–Effect: A Minimal Interaction Model

– The world is not a collection of states.

1. Purpose of This Document

This document makes explicit the following distinctions, which must be kept clear when a system interacts with the physical world.

- Sensors observe physical events

- Actions intentionally cause physical events

- Intent does not exist in sensors and belongs solely to the domain of action approval and responsibility judgment

- Not all Physical Events are subjects of judgment

The purpose of this document is to present a minimal model for reasoning about physical interaction.

2. Intent–State–Effect: The Minimal Interaction Model

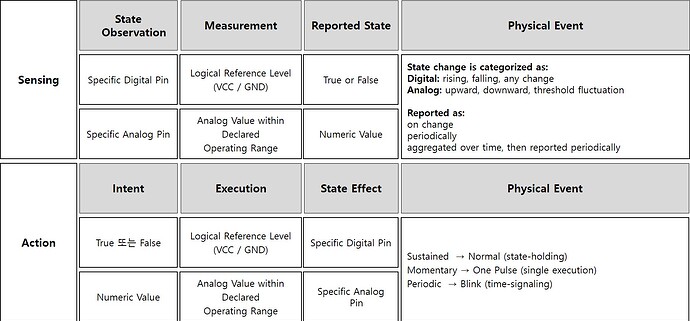

This table defines a minimal interaction model that describes how a system interacts with the physical world, independent of implementation.

This model separates interaction into the following three independent domains:

- State

- Intent

- Effect

These domains are linked in a loop: Effect causes changes in the physical world, and those changes are observed as new State.

Through this, the model clarifies:

- how physical signals are observed,

- how actions are executed, and

- where judgment and responsibility arise.

The purpose of this model is to provide a stable framework for understanding physical interaction.

3. Scope and Terminology Boundaries

The key terms used in this document are defined as follows:

Physical Event

An event that occurs in or is observed from the physical world

State

A physical condition observed by the system

Measurement

The act of reading the electrical state left on a circuit by a physical effect and expressing it as a value

Intent

A decision that approves execution

Execution

The act of applying Intent to the physical world

Effect

The physical result produced by execution

World Baseline

The foundational assumptions of the world managed outside the system

These distinctions are conceptual and independent of implementation.

4. State Observation

4.1 Definition of State

State refers to the result observed through Measurement.

- State is not a command

- State is not a judgment

- State is observable, recordable, and reportable

State is a description of the world, not an element that instructs or authorizes action.

This document aims to return State to its proper place.

State serves the following roles:

- Observes and describes the world

- Provides evidence of change

- Can be recorded, aggregated, and reported

However, State does not:

- Approve execution

- Instruct action

- Generate responsibility

Using State as an input to judgment is different from mistaking State itself for judgment.

What this document distinguishes is not the existence of State, but the authority of State.

4.2 Observation Sources

State is obtained through the following observation sources:

- Specific digital pins

- Specific analog pins

These observations do not change the world.

They only read physical results that have already occurred.

4.3 Observation Values

- Digital: Logical reference levels (VCC / GND)

- Analog: Analog values within a declared operating range

These values are not meaning.

They are Measurement results that may be used for interpretation.
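
The distinction between a value and its meaning can be sketched as a pair of read-only records. This is a minimal illustration, not a prescribed data format: the names `Level`, `DigitalReading`, `AnalogReading`, and `in_declared_range` are hypothetical, and the operating range is assumed to be declared by the designer rather than read from the sensor.

```python
from dataclasses import dataclass
from enum import Enum


class Level(Enum):
    """Logical reference levels for a digital pin."""
    GND = 0
    VCC = 1


@dataclass(frozen=True)
class DigitalReading:
    """A Measurement result from a digital pin. It carries no command or approval."""
    pin: int
    level: Level


@dataclass(frozen=True)
class AnalogReading:
    """A Measurement result from an analog pin, with its declared operating range."""
    pin: int
    value: float
    low: float   # lower bound of the declared operating range (assumed, designer-defined)
    high: float  # upper bound of the declared operating range (assumed, designer-defined)

    def in_declared_range(self) -> bool:
        # Only says whether the value lies inside the declared range.
        # It does not say what the value means or what should be done about it.
        return self.low <= self.value <= self.high
```

The records are frozen so that a reading, once taken, can be recorded and reported but not rewritten by later logic.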

4.4 Why State Machines Fail Here

State machines were highly effective models in the linguistic and digital logic domains.

4.4.1 Why State Machines Worked in the Linguistic Domain

- State itself was a unit of meaning

- State transitions were mostly caused by intentional inputs

- Incorrect transitions were reversible

- Failure costs were largely limited to logical errors

In other words, state changes closely aligned with action intent.

4.4.2 Why State Machines Cause Misinterpretation in the Physical World

In the physical world, State is different.

- Most state changes do not include intent

- They are caused by environment, noise, delay, and inertia

- State changes may be the result of actions, but are not grounds for approving action

- Incorrect transitions create physically irreversible effects

Applying state machines directly leads to the following misconceptions:

- State change = execution condition

- Observed result = action approval

- State-based transition = responsibility-free execution

As a result, unintended Physical Events are mistaken for Actions, and responsibility boundaries become blurred.

4.4.3 The Choice of the Intent–State–Effect Model

This model does not deny state machines.

It limits their scope.

- State machines are valid for technical management within State

- Approval of Execution and responsibility judgment are not within the domain of state machines

The Intent–State–Effect model permits Execution based on the existence of Intent, not on state transitions.

This does not replace state machines; it declares the boundary they must not cross.

5. State Change Categorization

State is defined not merely by value, but by how it changes over time.

This categorization is not for interpreting State, but for determining when State becomes a reportable Event.

In other words, categorizing state changes defines the reporting moment.

5.1 Digital State Changes

Digital state changes are classified as:

- Rising: Low → High

- Falling: High → Low

- Any change: All transitions

These changes define when State becomes reportable according to declared conditions.
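
The classification above can be sketched as follows. The names `Edge`, `classify_edge`, `is_reportable`, and `ANY_CHANGE` are hypothetical, and the declared condition is assumed to be expressed as a set of edges. The sketch decides only whether a change is reportable; it takes no part in approving action.

```python
from enum import Enum
from typing import FrozenSet, Optional


class Edge(Enum):
    RISING = "rising"    # Low -> High
    FALLING = "falling"  # High -> Low


# "Any change" is modeled here as the set containing both transitions (assumption).
ANY_CHANGE: FrozenSet[Edge] = frozenset({Edge.RISING, Edge.FALLING})


def classify_edge(previous: bool, current: bool) -> Optional[Edge]:
    """Return the transition between two consecutive digital samples, or None if unchanged."""
    if previous == current:
        return None
    return Edge.RISING if current else Edge.FALLING


def is_reportable(edge: Optional[Edge], declared: FrozenSet[Edge]) -> bool:
    """A change is reportable only if it matches the declared condition."""
    return edge in declared
```

For example, with a declared condition of `frozenset({Edge.RISING})`, a High → Low transition is observed but not reported.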

5.2 Analog State Changes

Analog state is not classified by continuous raw values, but by moments when declared criteria are crossed.

- Rising: Crossing a declared threshold upward

- Falling: Crossing a declared threshold downward

- Threshold fluctuation: Changes around a threshold

The threshold is not a sensor property.

It is a declared criterion defined by the system or designer to classify meaning and reporting moments.

This classification is not intended to stream continuous raw values into judgment, but to fix reporting moments as discrete events.
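
A minimal sketch of turning a continuous sample stream into discrete crossing events is shown below. The function names, the choice to treat a sample equal to the threshold as "above", and the `min_crossings` parameter used to flag threshold fluctuation are all assumptions made for illustration.

```python
from typing import List, Tuple


def threshold_crossings(samples: List[float], threshold: float) -> List[Tuple[int, str]]:
    """Return (sample index, direction) for each moment the declared threshold is crossed."""
    events = []
    for i in range(1, len(samples)):
        before, after = samples[i - 1], samples[i]
        if before < threshold <= after:
            events.append((i, "rising"))   # crossed the threshold upward
        elif before >= threshold > after:
            events.append((i, "falling"))  # crossed the threshold downward
    return events


def is_fluctuating(samples: List[float], threshold: float, min_crossings: int) -> bool:
    """Flag threshold fluctuation: repeated crossings within one window of samples."""
    return len(threshold_crossings(samples, threshold)) >= min_crossings
```

With a declared threshold of 25.0, the window `[20.0, 24.0, 26.0, 27.0, 23.0]` yields two events, one rising and one falling, instead of five raw values.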

6. State Reporting Modes

Observed State may be reported according to system requirements based on predefined reporting moments:

- Event-based Reporting: Report only when defined state changes occur.

- Periodic Reporting: Report the current State at fixed intervals regardless of change.

- Aggregated Reporting: Treat accumulated change, frequency, or duration trends as a single State representation.

Aggregated reporting prevents every moment from becoming a judgment target and provides more stable observation units.
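
The three reporting modes can be compared on the same window of digital samples. This is a sketch under stated assumptions: the `Sample` tuple layout, the function names, and the particular aggregate (change count plus time spent high) are hypothetical choices, not part of the model.

```python
from typing import Dict, List, Tuple

Sample = Tuple[float, bool]  # (timestamp in seconds, observed digital level)


def event_based(samples: List[Sample]) -> List[Sample]:
    """Report only samples whose level differs from the previous sample."""
    return [cur for prev, cur in zip(samples, samples[1:]) if cur[1] != prev[1]]


def periodic(samples: List[Sample], interval: float) -> List[Sample]:
    """Report the first sample at or after each due time, changed or not."""
    reports: List[Sample] = []
    if not samples:
        return reports
    next_due = samples[0][0]
    for sample in samples:
        if sample[0] >= next_due:
            reports.append(sample)
            next_due = sample[0] + interval
    return reports


def aggregated(samples: List[Sample]) -> Dict[str, float]:
    """Collapse a window into one representation: change count and time spent high."""
    changes = 0
    high_duration = 0.0
    for prev, cur in zip(samples, samples[1:]):
        if cur[1] != prev[1]:
            changes += 1
        if prev[1]:
            high_duration += cur[0] - prev[0]
    return {"changes": changes, "high_duration": high_duration}
```

In the aggregated mode, downstream judgment receives one summary per window rather than one input per sample, which is the stabilizing effect described above.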

7. Intent

Intent is a decision that authorizes action execution.

7.1 Characteristics of Intent

- Has a boolean nature (allowed / not allowed)

- Independent of its source

- Separated from state observation

This model does not concern itself with where Intent comes from.

It defines only how Intent participates in execution.

Intent is not a value; it is a judgment of executability.

8. Execution

Execution is the act of transforming Intent into a physical effect.

- Execution does not interpret State

- Execution does not decide Intent

- Execution only applies Intent to the physical world

Unlike Measurement, Execution presupposes Intent.

Execution is not the subject of judgment; judgment must already be completed before Execution.
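
One way to sketch the separation between Intent and Execution is an execution function that accepts an Intent and nothing else. The names `Intent`, `ExecutionRefused`, `execute`, and the `approved_by` field are hypothetical; the audit log is an assumed mechanism for tracing why an action was permitted.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class Intent:
    action: str
    allowed: bool     # boolean nature: allowed / not allowed
    approved_by: str  # recorded for audit only; Execution never branches on it


class ExecutionRefused(Exception):
    """Raised when Execution is requested without an approved Intent."""


def execute(
    intent: Optional[Intent],
    actuator: Callable[[str], None],
    audit_log: List[Tuple[str, str]],
) -> None:
    """Apply an already-approved Intent to the physical world.

    The signature has no State parameter: Execution does not interpret
    State and does not decide Intent. Judgment is finished before this call.
    """
    if intent is None or not intent.allowed:
        raise ExecutionRefused("no approved Intent; nothing is applied")
    audit_log.append((intent.action, intent.approved_by))  # why it was permitted
    actuator(intent.action)
```

Because `execute` cannot see sensor values, a state change alone cannot reach the actuator; something outside this function must first produce an `Intent` with `allowed=True`.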

9. Effect (Action Output)

Effect refers to the actual result left in the physical world by Execution.

9.1 Minimal Classification of Output Types

Output actions are semantically classified into three basic types:

- Sustained Output (Normal): Output that persists until changed

- Momentary Output (One Pulse): A short output representing a single action or trigger

- Periodic Output (Blink): Output where repetition and timing carry meaning

These three form a complete and minimal set of output meaning units.

Not all systems must implement all three identically.

Periodic output is often derivable as a temporal composition of sustained or momentary outputs.

PWM, sequences, and waveforms are parameterizations or combinations, not new meaning types.

Time can be meaning, but does not always need to be independent meaning.
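
The claim that periodic output can be derived from the other types can be sketched by modeling each output as a timeline of level transitions. The `Transition` layout and the function names are hypothetical, and no real hardware interface is implied.

```python
from typing import List, Tuple

Transition = Tuple[float, bool]  # (time in seconds, output level)


def sustained_output(start: float, level: bool) -> List[Transition]:
    """Set a level that persists until something else changes it."""
    return [(start, level)]


def momentary_output(start: float, width: float) -> List[Transition]:
    """One pulse: go high, then return low after `width` seconds."""
    return [(start, True), (start + width, False)]


def periodic_output(start: float, width: float, period: float, count: int) -> List[Transition]:
    """Blink, expressed as a temporal composition of momentary outputs."""
    timeline: List[Transition] = []
    for n in range(count):
        timeline.extend(momentary_output(start + n * period, width))
    return timeline
```

In this sketch, `periodic_output` adds no new primitive; it only arranges pulses in time, with `width`, `period`, and `count` acting as parameters.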

10. Goals and Action Purpose

Target values (e.g., target temperature) define what an action aims toward.

Target values represent:

- Action purpose

- Accuracy and completion conditions

- Convergence or termination criteria

Target values do not:

- Approve execution

- Trigger actions

- Replace judgment

Target values have meaning only when an approved Intent already exists.

Execution applies these target values to the physical world.
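
The relationship between a target value and an approved Intent can be sketched with a temperature example. The function name `next_step`, the `tolerance_c` parameter, and the returned step labels are assumptions for illustration. The comparison against the measured value is used here only as the completion criterion of an already-approved action, not as a trigger.

```python
from typing import Optional


def next_step(
    intent_allowed: bool,
    target_c: float,
    measured_c: float,
    tolerance_c: float = 0.5,
) -> Optional[str]:
    """Return the next step of a heating action, or None if no Intent is approved."""
    if not intent_allowed:
        # A target without an approved Intent has no meaning: nothing is triggered.
        return None
    if measured_c < target_c - tolerance_c:
        return "heat"  # still converging toward the target
    return "done"      # completion criterion reached
```

A room at 18 °C with a target of 22 °C produces no action at all unless `intent_allowed` is true, however large the gap.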

11. Physical Events: Sensing vs Action

Both sensing and action deal with Physical Events.

However, not all Physical Events carry the same meaning or responsibility.

- Sensing observes Physical Event results via Measurement

- Action intentionally generates Physical Events

Sensing does not create change and thus carries no Intent or responsibility.

Action presupposes Intent and creates irreversible changes in the physical world.

12. Physical Events Without Intent

Events that cause real physical changes without Intent are treated only as Sensing targets, not Actions.

12.1 Types of Events Without Intent

- Naturally occurring changes

- Unintentional human intervention

- Passive physical system responses

- External actor intervention

- Faults and malfunctions

- Delayed or residual effects

12.2 Protection Boundaries and Stability

Unintentional events are generally Sensing targets, but those that threaten system stability must be immediately isolated or reported within protection boundaries.

12.2.1 Self-Preservation

When unintentional events exceed allowed physical or electrical limits, they become stability issues, not observation issues.

12.2.2 Preventing State–Action Feedback Loops

Out-of-range unintentional changes must not be used as judgment inputs.

They must be handled by separate protection logic, not as signals triggering Intent or Execution.
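
A minimal sketch of this routing is shown below, assuming the allowed limits are declared as a simple numeric range. The function name `route_readings` and the two output lists are hypothetical.

```python
from typing import List, Tuple


def route_readings(
    readings: List[float], low: float, high: float
) -> Tuple[List[float], List[float]]:
    """Split readings into judgment inputs and protection events.

    In-range values may be used as inputs to judgment. Out-of-range values
    are handed to separate protection logic and never reach judgment.
    """
    judgment_inputs: List[float] = []
    protection_events: List[float] = []
    for value in readings:
        if low <= value <= high:
            judgment_inputs.append(value)
        else:
            protection_events.append(value)
    return judgment_inputs, protection_events
```

Keeping the two lists separate means an out-of-range spike cannot enter the path that produces Intent or Execution, which is how the feedback loop is avoided in this sketch.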

12.3 Repeated Observation and World Baseline

When unintentional events show patterns or are observed repeatedly, the system must treat them as baseline world behavior using an externally defined World Baseline.

This baseline is not fixed and may be continuously validated and updated by external judgment entities (users, policy engines, or higher systems) based on aggregated observation.

World Baseline is descriptive. It does not approve Execution.

12.3.1 Environmental Context

Physical events repeatedly caused by climate, season, weather, location, or spatial constraints are components of the World Baseline.

12.3.2 Human Context

Repeated physical events observed through user habits, routines, and interaction patterns are also part of the World Baseline.
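
The descriptive role of the World Baseline can be sketched as a lookup that labels an observation and returns nothing else. The `BaselineBand` record, the `describe` function, and the example labels are hypothetical. The baseline is assumed to be supplied and updated by an external judgment entity, so the sketch only reads it.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class BaselineBand:
    """One externally defined range of expected world behavior."""
    label: str
    low: float
    high: float


def describe(value: float, baseline: List[BaselineBand]) -> str:
    """Label an observation against the World Baseline.

    The result is a description only. It is not an approval, and nothing
    in this function can start Execution.
    """
    for band in baseline:
        if band.low <= value <= band.high:
            return band.label
    return "outside-baseline"
```

An observation labeled `"outside-baseline"` is still just State; whether anything should be done about it remains a matter for judgment elsewhere.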

13. Emergency Stop

Emergency Stop is not part of the Intent, State, or Execution judgment model.

It is a physically enforced cutoff applied externally to the system.

13.1 Logical Independence

Emergency Stop operates regardless of Intent presence or Execution legitimacy and bypasses all judgment processes.

13.2 Energy Cut-Off

Emergency Stop does not command “stop all actions” — it physically removes energy, eliminating the possibility of Effect.

13.3 Scope Boundary Declaration

Emergency Stop is therefore excluded from this model, not because it lacks intent, but to declare clearly the boundary that judgment cannot reach.

14. Three Minimal Axioms of This Model

Axiom 1 — Not all physical events require judgment

Observation is not decision.

Axiom 2 — Intent belongs to action, not sensing

Where Intent exists, responsibility exists.

Axiom 3 — Judgment is required only when responsibility arises

Without responsibility, there is no judgment.

15. Conclusion

More important than the ability to call the physical world is the boundary that allows us to stop that call.